While analytics running on surveillance cameras isn’t new, the combination of lower barriers to entry for developers, robust cameras with outstanding processing power, and deep learning is unlocking a new generation of analytics on the edge, limited only by the imagination of developers. We caught up with Mats Thulin, Axis Communications’ Director of Core Technologies, to get his view on the benefits today and in the future.

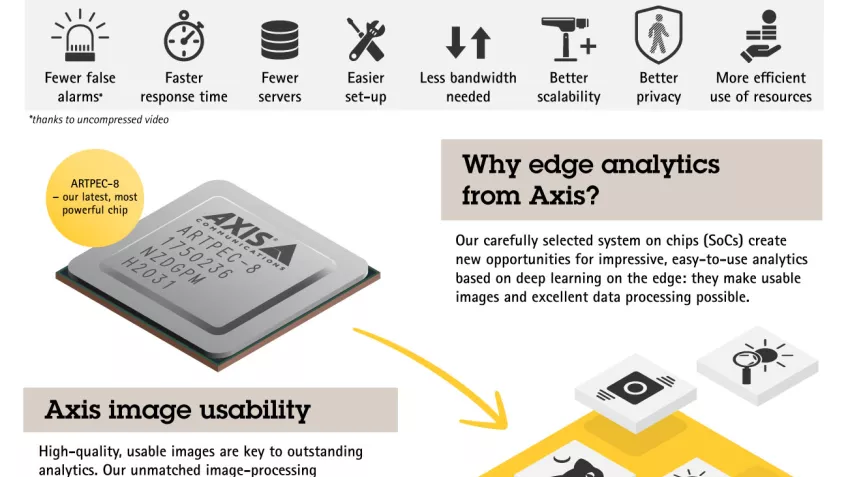

The arrival of ARTPEC-8, the latest generation of the Axis system-on-chip (SoC), represents a new era of deep learning analytics at the edge of the network. ARTPEC-8 provides analytics such as real-time object detection and classification, processed within the camera itself, and is the culmination of over 20 years’ development.

While in-camera analytics isn’t new – video motion detection was a feature of Axis cameras more than a decade ago – the combination of superior image quality and deep learning is transforming analytics capabilities.

Reducing the burden of analytics on the server

“A decade ago, surveillance cameras didn’t have the processing power needed to deliver advanced analytics at the edge of the network, so there was a natural gravitation towards server-based analytics,” says Mats Thulin, Director of Core Technologies, Axis Communications. “But while processing power on a server is plentiful, other factors can present challenges. Compression of video images before transfer from the camera to the server can decrease the quality of the images being analyzed, and scaling solutions where all analytics takes place on a server can become a costly exercise.”

This is particularly the case when deep learning is used within analytics. Mats continues: “Analytics employing deep learning is a compute-intensive task. When running purely on servers, you need to encode and then decode the video, which takes additional time before you can process the stream. Processing a number of video streams, even in a relatively small system with 20-30 cameras at 20 or 30 frames per second at a high resolution, requires significant resources.”
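To make the scale concrete, the arithmetic behind that example can be sketched as follows. The camera counts and frame rates come from the quote above; the 1920×1080 resolution is an assumption for illustration only, since the article says just "high resolution", and the function names are hypothetical.

```python
def frames_per_second(cameras: int, fps: int) -> int:
    """Total frames a server must decode and analyze each second."""
    return cameras * fps


def decoded_pixels_per_second(cameras: int, fps: int, width: int, height: int) -> int:
    """Raw pixel throughput after decoding, before any analytics runs."""
    return frames_per_second(cameras, fps) * width * height


# Range quoted in the article: 20-30 cameras at 20-30 frames per second.
low = frames_per_second(cameras=20, fps=20)
high = frames_per_second(cameras=30, fps=30)

# Assumed resolution (not stated in the article): 1920x1080.
pixels_high = decoded_pixels_per_second(30, 30, 1920, 1080)
```

Under these assumptions, even the small system described has to handle between 400 and 900 frames every second on the server, which is the load that moving analytics into the camera avoids.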

“The processing power and capabilities of our edge devices are now at a level where the analytics function is migrating back to them,” adds Mats. “And more analytics within the camera itself reduces the need for compute-intensive server- and cloud-based analytics. Importantly, it also means that analytics is applied to images at the point of capture, meaning the highest possible image quality.”

Images optimized for machines and humans

Advances in image quality take into account the fact that images are increasingly being ‘viewed’ by machines instead of human operators, an important distinction as machines do not view images in the same way as humans.

“We can now ‘tune’ video images for analysis by AI, as well as optimizing for the human eye,” explains Mats. “For example, when tuning an image for an operator, you typically aim to reduce noise. But for AI analytics, noise reduction isn’t as necessary. The objectives in tuning the image for humans or AI analytics are different, so using both in parallel will arrive at a higher quality result.”

Improved system scalability

Analytics at the edge can reduce the amount of data sent across the network, creating efficiencies in bandwidth, storage, and servers. That’s not to say that server-based analytics no longer has a role to play. Far from it. Ultimately, hybrid approaches that use the strengths of edge analytics and server- and cloud-based analytics will become the optimal solution.

“Sharing the processing load between the edge and server will make systems much more scalable, because adding a new camera with an edge analytics capability means you wouldn’t need to increase server processing power,” explains Mats.

While standalone edge systems will continue to be used for scene analytics applications – creating real-time alerts based on object recognition and classification – Mats believes that hybrid server and edge analytics solutions will become increasingly dominant for advanced processing requirements.

This is enabled by the metadata created by edge analytics alongside the video images. Metadata – in the context of video – essentially tags elements in a scene. In other words, it adds descriptors or intelligence about the scene to the raw video footage. The video management software (VMS) can use the metadata to act upon the scene, triggering actions in real time or post-event, as well as searching for elements of interest or performing further analysis.
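As a rough illustration of how software downstream of the camera might use such metadata, here is a minimal sketch. The `DetectedObject` fields and the `objects_of_interest` helper are hypothetical and are not the ONVIF metadata schema or any Axis format; they only show the idea of searching tagged scene elements instead of raw video.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DetectedObject:
    """One tagged element in a scene (hypothetical fields for illustration)."""
    label: str          # e.g. "person", "vehicle"
    confidence: float   # classifier score between 0 and 1
    timestamp: float    # seconds since the start of the recording


def objects_of_interest(
    metadata: List[DetectedObject], label: str, min_confidence: float = 0.5
) -> List[DetectedObject]:
    """Return the tagged elements matching a label, in their original order."""
    return [
        obj for obj in metadata
        if obj.label == label and obj.confidence >= min_confidence
    ]


scene = [
    DetectedObject("person", 0.92, 1.0),
    DetectedObject("vehicle", 0.81, 1.5),
    DetectedObject("person", 0.30, 2.0),
]
people = objects_of_interest(scene, "person")
```

The same filter could drive a real-time alert or a post-event search; in both cases the query runs over small descriptor records, not over decoded frames.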

Protecting privacy

Edge analytics can help support privacy while also delivering security, safety, and operational benefits. For example, edge-based people counting analytics – useful in a retail environment – needs to transfer only count data from the camera rather than video images.
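A people counting result of this kind can be very small. The sketch below builds one such message; the field names and the `build_count_message` helper are assumptions for illustration and do not describe the format of any actual Axis application.

```python
import json


def build_count_message(camera_id: str, entered: int, exited: int, timestamp: str) -> str:
    """Serialize a people counting result as JSON; no image data is included."""
    payload = {
        "camera_id": camera_id,
        "entered": entered,
        "exited": exited,
        "occupancy": entered - exited,  # people currently inside
        "timestamp": timestamp,
    }
    return json.dumps(payload, sort_keys=True)


message = build_count_message("cam-01", entered=12, exited=5, timestamp="2024-01-01T10:00:00Z")
```

The message carries only numbers and an identifier, so nothing that could identify an individual leaves the camera.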

But edge analytics can also protect privacy even when video images are transferred.

“Intelligent masking, where the faces of people in a scene can be blurred, means that privacy is maintained. By sending the original stream in addition, which is only viewed when necessary, this means that if there’s an incident, operators can still access the un-masked video,” says Mats.
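The two-stream idea can be sketched in a few lines. This example is a simplification under stated assumptions: the frame is a small grayscale grid of integers, the face rectangle is given (the detection step is not shown), and the region is filled with its mean value as a stand-in for a real blur. The `mask_region` helper is hypothetical.

```python
from typing import List

Frame = List[List[int]]


def mask_region(frame: Frame, top: int, left: int, height: int, width: int) -> Frame:
    """Return a masked copy of the frame; the original frame is left untouched."""
    masked = [row[:] for row in frame]
    region = [
        frame[r][c]
        for r in range(top, top + height)
        for c in range(left, left + width)
    ]
    mean = sum(region) // len(region)  # flat fill, standing in for a blur
    for r in range(top, top + height):
        for c in range(left, left + width):
            masked[r][c] = mean
    return masked


original = [
    [0, 10, 20],
    [30, 40, 50],
    [60, 70, 80],
]
masked = mask_region(original, top=0, left=0, height=2, width=2)
```

Because `mask_region` returns a copy, the masked frame can be used for everyday viewing while the original remains available for access when an incident requires it.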

A new world of computer vision applications

Alongside the increased capabilities of hardware powered by ARTPEC-8, enhancements to the Axis Camera Application Platform (ACAP) are giving a broader group of developers than ever before the chance to create computer vision applications in familiar environments. Now in its fourth generation, ACAP includes industry-standard APIs and frameworks, as well as support for high-level programming languages.

“The changes in ACAP version 4 mean we’re seeing an increase in development partners from a different background to the traditional surveillance sectors,” confirms Mats. “ACAP is based on open, familiar components for developers coming from a cloud or server background. We’re pushing the ONVIF metadata specifications for an open environment where developers can create hybrid architectures with different ONVIF conformant products. This is one of the key enablers in getting a hybrid system and architectures to work.”

Advances in detection capabilities are also helping both security developers and innovators from other sectors.

“Advances in object detection, with cameras increasingly developing contextual understanding of a scene – differentiating between a street, a lawn or a parking lot, for example – will help us to implement more accurate analytics on the scene and other objects within it. This knowledge will bring another level of innovation,” says Mats. “The new potential for edge analytics is huge, for our existing partners, developers new to computer vision applications and, of course, to our customers.”